A new study claiming that overweight and Class 1 obese people have a lower mortality rate has been bouncing around the world since Thursday. National Public Radio’s report seems to be the most comprehensive, but it hints at the two most extreme, polarized viewpoints:

Cosmetic: This is a victory for the overweight—now we can trash skinny people (again)!

Medical: If people hear about this, everyone will stop exercising and eating their vegetables and then everyone’s going to die!

Both views treat the public like infants who can’t possibly think for themselves.

Doctors are right to worry that a sizeable portion of the population will use this news as an excuse for whatever unhealthy habits they love. This is why it is important to report the many factors that could be skewing the results. But many people will always cherry-pick whatever statistics suit their lifestyle or claim to be the exception to the rule. I don’t have any political solutions for engaging with contrarians, whether we’re debating eating habits or global warming, but talking down to them and using scare tactics has a pretty high failure rate.

And from the disability rights perspective, there are exceptions to the rule when it comes to health. Thousands of them. As I’ve said before, a round belly is not always a sign of fat. A bony body is not always a sign of an eating disorder. Many forms of exercise can be more hazardous than beneficial to people with certain conditions. And many life-threatening conditions are invisible. Medical tests, not appearance, are always the most reliable indicators of health. This robs us of the easy answers we crave, the ones that make public debate simpler, but there has never been and never will be a one-size-fits-all health program for the 7 billion humans on the planet.

You and your doctor know better than anyone else if you are healthy or not. If she says you are overweight but your genes and cholesterol levels put you at no risk for heart disease, she’s probably right. If she says your weight is ideal but your eating habits put you at risk for malnutrition, she’s probably right. And if her advice seems sound but her delivery makes you feel too ashamed to discuss it, go find someone with better social skills to treat you. At the individual level, it’s no one else’s business. Outside of the doctor’s office, it shouldn’t be any more socially acceptable to discuss someone else’s weight or waist size than it is to discuss their iron levels, sperm count, or cancer genes.

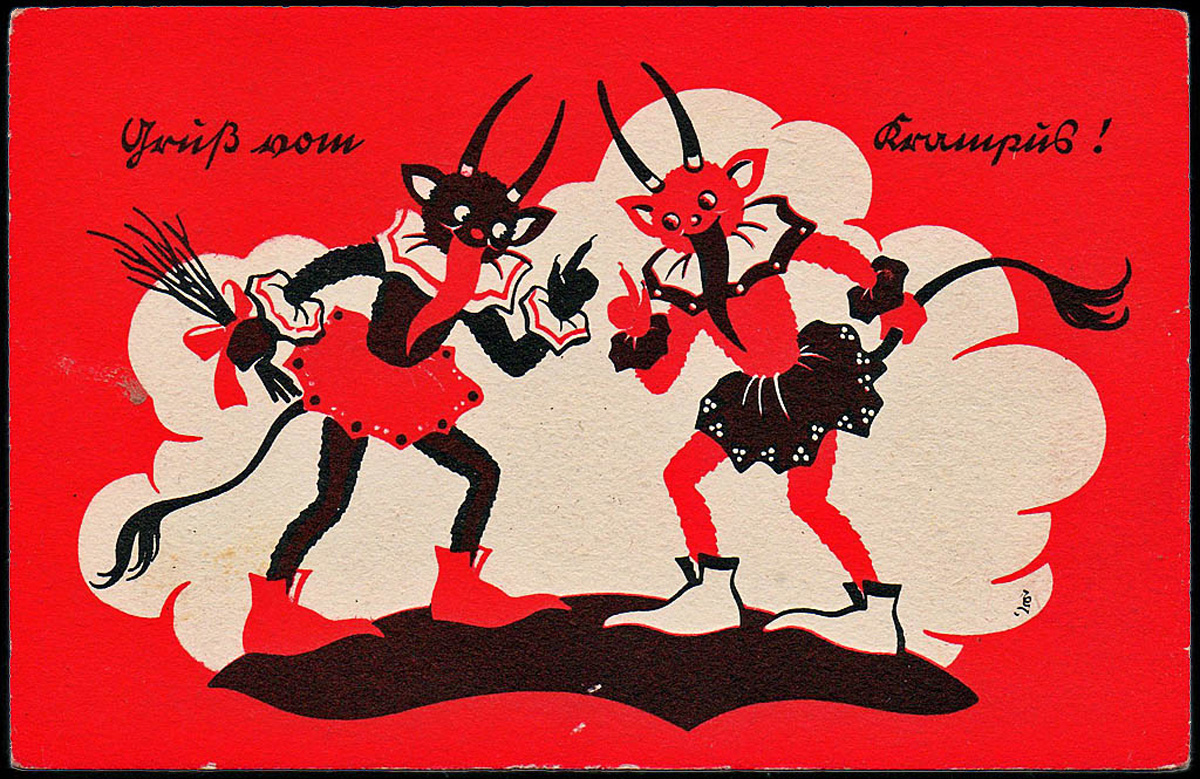

But beauty standards and health trends often go hand in hand. And what really needs to go is the lookist idea that we’re all semi-licensed doctors who can diagnose people just by glancing at them and deciding how they measure up according to the latest medical research. We have a hard time letting this go because it’s fun to point out others’ supposed weaknesses. Snickering that ours is the better body type feels self-elevating and validating because it calms our insecurities. Beauty standards are cultural and have morphed constantly throughout history, but they have always remained narrow. (This is especially the case for women, though I sincerely apologize for not providing more research on men.) Whether the fashion favors big breasts or flat tummies, public praise for certain body types has almost always come at the expense of others:

After decades of Kate Moss-style heroin chic, Christina Hendricks (see above) of Mad Men has garnered lots of attention for her curves, and this week’s study is likely to encourage her fans. “Christina Hendricks is absolutely fabulous…,” says U.K. Equalities Minister Lynne Featherstone. “We need more of these role models. There is such a sensation when there is a curvy role model. It shouldn’t be so unusual.” She is dead right that it shouldn’t be hard for curvy women to find sexy heroines who look like them in film and on television, just as skinny women or disabled women or women of any body type shouldn’t have to give up on ever seeing celebrities with figures like theirs. But “Real women have curves!” is just as exclusionary as the catty comments about fat that incite eating disorders. And when Esquire and the BBC celebrate Hendricks as “The Ideal Woman,” they mistake oppression for empowerment.

We readily accept the idea that people with all sorts of hair colors and lengths can be beautiful. Will mainstream medicine and cosmetics ever be able to handle the idea that all sorts of different bodies can be healthy? History says no. But maybe it’s not naïve to hope.

And what does Christina Hendricks have to say about all of this? “I was working my butt off on [Mad Men] and then all anyone was talking about was my body.”

Touché.